Maximizing the chances of an Anti-AI Human Revolution

In what kind of world would humans be the most likely to rise up against AI and smash it to bits with hammers?

There are a lot of open questions related to AI safety, such as "will it happen quickly or slowly?", "will it take all our jobs?", and "will it kill us all?"

The one I want to try to think about today is, "If AI ever becomes a threat, will we be able to stop it in time?"

Sometimes I think these questions are easier to answer if you flip them around and ask them in a different way. Purely as a thought experiment, let's suppose AI does eventually become dangerous. In what kind of world would we maximize the chances of humans rising up all at once to smash the AIs to bits with hammers?

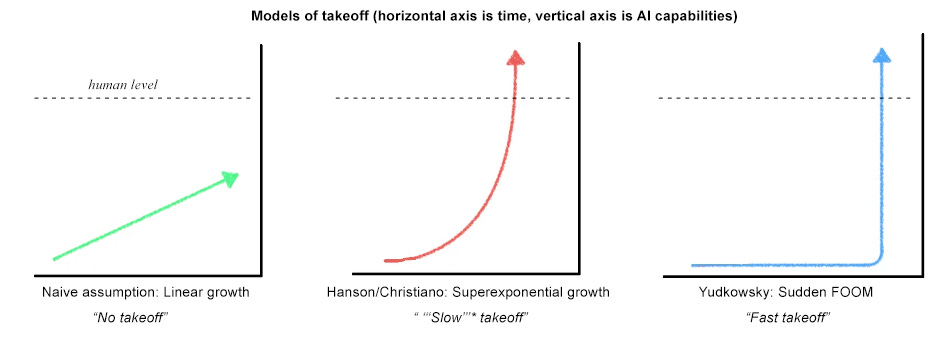

First of all, this can probably only happen in a world where Yudkowskian "FOOM" is off the table. For those unacquainted with the jargon, it refers to a world in which we go from non-superhuman AI to superhuman AI in what amounts to an instant: say, overnight, or maybe over a few days.

There are a few other scenarios. One is steady, linear growth in AI capabilities forever, which pretty much rules out a sudden takeoff; the AI slowly approaches human capabilities over a very long time, and we have plenty of time to monitor and react to it. Another is that it just levels off at some point, never gets to broadly superhuman levels, and we also develop very good reasons for believing it's going to be stuck at that level for a long, long time.

Another scenario that's a bit more concerning is the so-called """slow""" takeoff. I put the word slow in triple sarcastic scare quotes because it's only slow relative to instantaneous "FOOM": progress still follows an exponential curve, but on the way to the moon you at least get some warning time. Here's a great illustration from an Astral Codex Ten post on the subject:

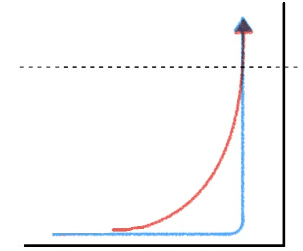

An interesting point Scott Alexander makes in that article is that if you superimpose the """slow""" takeoff on top of the FOOM scenario, aligning the date that superintelligence arrives in both models, """slow""" takeoff is actually "faster" at every single point along the curve in terms of AI capability growth. The only difference is that you get more warning before the final vertical leap.
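To make that superimposition concrete, here is a toy sketch in Python. Every number in it is invented for illustration (the arrival year, the capability units, the 5% pre-jump growth rate), and modeling FOOM as near-flat growth followed by a sudden jump is a simplification assumed here, not something taken from the post.

```python
import math

# All constants below are illustrative assumptions, not real estimates.
ARRIVAL_YEAR = 10.0          # year superintelligence arrives in BOTH models
START_LEVEL = 1.0            # capability today, in arbitrary units
SUPER_LEVEL = 1000.0         # capability that counts as superintelligence
FOOM_PRE_JUMP_GROWTH = 0.05  # sluggish annual growth before the FOOM jump


def foom_capability(year):
    """Near-flat growth, then an instantaneous jump at the arrival year."""
    if year >= ARRIVAL_YEAR:
        return SUPER_LEVEL
    return START_LEVEL * (1 + FOOM_PRE_JUMP_GROWTH) ** year


def slow_takeoff_capability(year):
    """Smooth exponential growth that reaches the same level on the same date."""
    if year >= ARRIVAL_YEAR:
        return SUPER_LEVEL
    rate = math.log(SUPER_LEVEL / START_LEVEL) / ARRIVAL_YEAR
    return START_LEVEL * math.exp(rate * year)


def compare(years):
    """Return (year, foom level, slow-takeoff level) for each requested year."""
    return [(y, foom_capability(y), slow_takeoff_capability(y)) for y in years]


if __name__ == "__main__":
    for year, foom, slow in compare(range(0, 11)):
        print(f"year {year:2d}: FOOM {foom:8.2f} | slow takeoff {slow:8.2f}")
```

Under these assumptions both curves start and end at the same place, but the """slow""" curve sits above the FOOM curve at every year in between, which is the sense in which it is "faster" while still giving more warning.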

So for the rest of this article, let's assume that we're dealing with a """slow""" takeoff situation, because in a FOOM scenario we go from oblivious to dead without any chance to react (other than shutting everything down right now, as Yudkowsky advocates).

With these assumptions and constraints in place, let's return to our question: in a world where AI capabilities are advancing at a pace no faster than the red curve, what would maximize the chance of a broad-based anti-AI human uprising?

Some people argue humanity will just remain complacent and completely fail to react in time, even with plenty of warning, and they point to our languid response to climate change as evidence. My counter is that climate change is a rare kind of threat. For one thing, many people still don't even believe climate change is real, or if they do, they don't believe it's dangerous. Nobody should be surprised when people who don't believe in something (or think it's harmless) don't valiantly fight against it.

As for everybody else who does believe it's real and does believe it's dangerous but still somehow just can't get their act together, I chalk that up to climate change being an amorphous, abstract, and entirely depersonalized threat. All these people "know" it's dangerous, but it just doesn't hit them in the same way as a loudly ticking time bomb, a hurtling meteor, or a bug-eyed slobbering monster.

But you know what humanity does have a proven track record of spontaneously and efficiently coordinating to take up arms against? Scary-seeming people. Just search Google News for the phrase "angry mob kills" and I guarantee you will find some fresh results. Humans are very good at feeling threatened by personal beings, then quickly dehumanizing them and ginning up a mob to kill them with incredible violence. You can find this phenomenon across all cultures, races, and time periods. It's not something most people ever do, but my point is that it's a deep and fundamental human capability that even "normal" and "civilized" people can and will take part in given the right triggers.

Suffice it to say, this is a really horrifying thing about humanity, but there's no doubt in my mind that we would collectively deploy it in an instant if we got scared enough. Because let's be real, othering a scary AI will be easy. Some will appeal to theories of consciousness and reason to expand the moral circle to our new digital brethren, but when the chips are down, humans reach for a club. Cain didn't spare Abel, and he won't spare AI.

To wit: imagine that bug-eyed slobbering alien monsters land on Earth tomorrow and declare their intention to kill us all. There is not a doubt in my mind that we would start murdering them in horrible ways approximately instantly, or die trying. If AIs get scary enough, then the AIs will become the bug-eyed slobbering alien monsters in the eyes of many humans. Then the only question left is whether the AIs will get sufficiently scary to sufficiently many humans before the AIs get sufficiently powerful and dangerous.

There are many worlds in which this doesn't happen, and humans and AIs (superhuman or not) get along in peaceful coexistence. But we're looking for the world in which humans turn on AIs really hard, really fast. What does that world look like? Here's my best guess:

Heavily anthropomorphized AI applications proliferate, ones that look, act, and talk as much like people as possible. This matters most if humans start treating the AIs as they would a person, to the point that some humans come to believe they are people. Although this might increase empathy with AIs for some or even many, paradoxically it sets the stage for the most brutal of backlashes, precisely because our scariest and most dangerous enemies are other people.

AI crosses into the uncanny valley. The more AIs get anthropomorphized and the more "human" they become, the warier we get when they're not all the way there and something feels deeply disturbing and off about them. The closer they come to us, the stronger that wariness gets. Remember what Homo sapiens did to the Neanderthals, Denisovans, and every other one of our us-but-not-quite-us sibling species.

AI becomes very scary very visibly. People start making AI military applications. People start making virtual girlfriends that welcome and even invite verbal abuse. People start making AI applications that are more addictive than the most powerful video games and drugs combined. People start making AI applications that intentionally behave in demonic and creepy ways just to be edgy. People start making AI that's just plain weird and violates different kinds of taboos in all kinds of new and creative ways.

But that's just foundational work. The really important features of the Anti-AI human uprising world are these:

A massive number of jobs are automated away. Let's say real unemployment doubles or triples in a single year, and in this world all the added productivity goes to the rentier class rather than to newer and better jobs for displaced workers and/or some kind of Universal Basic Income. Displaced workers get nothing. But most importantly...

Most of the lost jobs belonged to white-collar, middle-class workers. You'll get a revolt if the working class or the poor lose most of the jobs, but if the bourgeoisie and the intelligentsia lose their meal tickets, you'll get a revolution. Why? Because those are the people who, historically, actually lead the revolutions. The American and French revolutions had broad-based backing across social classes, but much of their leadership came from the economic and intellectual elite. Likewise, famous communist leaders like Lenin, Mao, Castro, and Ho Chi Minh, as well as Islamists like Ayatollah Ruhollah Khomeini and Osama bin Laden, came from relatively privileged and educated backgrounds. Above and beyond that, politicians don't actually care that much about the poor, but they do care about the middle- and upper-class coalition, because its members are the ones most likely to vote. Finally, the intelligentsia and the civil service have direct connections to existing institutional power. This is the hornet's nest to kick if you really want to see social upheaval.

None of this is to say that I think scary AI is necessarily all that likely to arise, or that if it does, it will destroy us all. But supposing it does, this is the kind of world I imagine would be most likely to see humans pull off a Butlerian Jihad.