Fixing Steam's User Rating Charts

Recently I wrote a big article about the new changes in Steam, and rendered my judgment: cautiously optimistic.

After further reflection, my position is unchanged -- most of it's very good, but some things need work.

NOTE: Although my tone throughout is quite critical, make no mistake, I am a huge fan of the New Steam. I just see many ways to make it even better!

User Ratings

Today I'm going to turn a critical eye on what I see as the hidden linchpin of the entire system: user ratings. It's not known to what degree or in what manner user review scores factor into what shows up on Steam's customized front pages and discovery queues, but there is one crucial piece of evidence right out in the open -- the "sort by user review" function on the search page.

If you go to the Steam homepage and click on the search icon without typing anything in, you get a list of everything on Steam and various ways to narrow/filter your search.

The default option is to sort by "relevance", which I assume is powered by Steam's algorithmic recommendation engine. However, there's also an option to sort by "User Review Score."

It's broken.

How Not To Sort

Sorting a bunch of different items by average user rating is a well-understood problem, with my favorite solution being Evan Miller's, which uses statistical sampling to properly compare items with large differences in the number of reviews.

By contrast, here's Steam's sorting algorithm:

- Sort all the games on Steam into different semantic buckets:

- "Overwhelmingly Positive"

- "Positive"

- "Mixed"

- etc.

- Sort by bucket, then internally sort each bucket by the sheer number of user reviews per game.

This means that a game with a 99% score and 32,000 ratings (Portal 2) appears below a game with a 95% score and 38,000 ratings (Tripwire Bundle). Surely we have enough samples to confidently assert that Portal 2 is more highly rated?
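The two-key sort described above can be sketched in a few lines of Python. This is only my reconstruction from the search results, not Valve's actual code: the `Game` class, the `BUCKET_RANK` table, and the function name are all made up for illustration, and only the two games and their numbers come from the example above.

```python
from dataclasses import dataclass

# Bucket order, best first. The names are Steam's; the numeric ranks
# are an assumption made for this sketch.
BUCKET_RANK = {
    "Overwhelmingly Positive": 0,
    "Very Positive": 1,
    "Positive": 2,
    "Mostly Positive": 3,
    "Mixed": 4,
}


@dataclass
class Game:
    name: str
    bucket: str
    percent_positive: int
    review_count: int


def steam_style_sort(games):
    """Sort by bucket first, then by raw review count (largest first).

    Note that percent_positive is never consulted inside a bucket.
    """
    return sorted(games, key=lambda g: (BUCKET_RANK[g.bucket], -g.review_count))


games = [
    Game("Portal 2", "Overwhelmingly Positive", 99, 32_000),
    Game("Tripwire Bundle", "Overwhelmingly Positive", 95, 38_000),
]

print([g.name for g in steam_style_sort(games)])
# -> ['Tripwire Bundle', 'Portal 2']
```

Within a bucket the actual score is ignored entirely, which is why the 95% bundle lands above the 99% game.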

There's more. Because everything is sorted first by semantic bucket, you don't even see a mere "positive" game until page 68:

Here's the Problem.

Steam's user ratings are massively skewed towards the positive (source):

According to @SteamCharts, the median value falls in the "Very Positive" bucket, the second one from the right. That means that half of the games on Steam are rated "Very Positive" or higher!

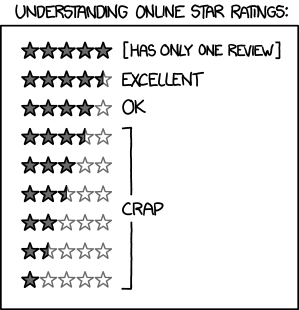

I can't help but think of this XKCD comic:

Steam's naive sorting method wouldn't be terrible if we had a normal distribution of user scores, where most games were in the middle and just a few were at either extreme. But given the positive skew, it's basically just taking the top half of the games on Steam and sorting them according to NUMBER of user reviews, which is virtually indistinguishable from a top selling chart.

Now, there's nothing wrong with a "sort by popularity" search ranking -- sometimes you just want to find stuff that's already popular! However, it should be clearly labeled as such. Fortunately, the solution is simple.

My recommendation:

- Rename "sort by user rating" to "sort by popularity"

- Add a true "sort by user rating" option, using Evan Miller's method

Here's an example of how Evan Miller's method would affect the rankings of the top 25 games on Steam:

Portal 2 would shoot up 17 ranks and Counter-Strike GO would drop by 19! Note that I only re-sorted the top 25 search results. If I had done this test with the entire set of games on Steam instead of just the top 25, the results might be different still, as some statistically significant 99% scores might be lurking around rank 100 or so.

Now, "big deal" you might say -- the new ranking system just reshuffles the big popular games in a slightly different way. Well, look what happens when you narrow by tag. Here's everything tagged under the (loosely-defined) "RPG" category:

Note that Bastion would become #3 in its category, vaulting above 7 AAA titles and even dethroning the "2D Minecraft" heavyweight, Terraria.

The best part about Miller's method (based on a Wilson score confidence interval) is that it is self-correcting.

It answers the question:

What minimum score rating am I 95% confident this title would receive if everybody on the system were to rate it?

This means that it is inherently skeptical of any game with a small number of reviews and tries to err on the low side. Like all statistical methods, it has a small error margin (5%), so games with a relatively low number of scores could occasionally vault above their "true" position in the charts. However, that would immediately increase their visibility, in turn increasing the number of reviews, providing enough data to move the title to its true position.
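For the curious, here is a minimal Python sketch of the lower bound of the Wilson score interval, which is the quantity Miller's method sorts by. The function name and the rounded review counts are mine; `z = 1.96` is the usual value for a 95% interval.

```python
import math


def wilson_lower_bound(positive, total, z=1.96):
    """Lower bound of the Wilson score interval for a thumbs-up ratio.

    positive: number of positive reviews
    total:    total number of reviews
    z:        normal quantile (1.96 for a 95% interval)
    """
    if total == 0:
        return 0.0  # no reviews yet, so assume nothing
    p = positive / total
    z2 = z * z
    centre = p + z2 / (2 * total)
    margin = z * math.sqrt((p * (1 - p) + z2 / (4 * total)) / total)
    return (centre - margin) / (1 + z2 / total)


# Review counts derived from the rounded figures quoted earlier.
portal_2 = wilson_lower_bound(31_680, 32_000)         # 99% of 32,000
tripwire_bundle = wilson_lower_bound(36_100, 38_000)  # 95% of 38,000
single_review = wilson_lower_bound(1, 1)              # one thumbs up

print(portal_2 > tripwire_bundle)  # True
print(round(single_review, 2))     # 0.21
```

With tens of thousands of reviews the bound sits very close to the raw percentage, so Portal 2 ranks above the Tripwire Bundle. A title with a single thumbs up is nominally 100% positive, but its lower bound is only about 0.21, which is the skepticism described above.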

By contrast, the current ranking system leads to the popular becoming more popular -- once you're on the top charts, you have increased visibility, which leads to more reviews, which further cements your chart position (as long as you stay inside your semantic rating bucket).

Those of us who want to discover hidden gems really need the search functionality to work with us, not against us. We want a system where the top charts are self-correcting, rather than self-reinforcing. Otherwise we get a situation like Apple's with frozen charts, shady tactics, and skyrocketing user acquisition costs.

And heaven help us if the "user rating" component of Steam's magical new recommendation engine (the invisible hand that designs customized front pages and discovery queues) is using the same naive ranking method.

Alright, the current ranking algorithm gives short shrift to a statistically significant 98% game with fewer reviews than a more popular 95% game. But what about games that are merely "mostly positive"?

Turns out they get absolutely buried.

Digging Deeper

By poring through the search results, I've got a pretty good idea about how Steam calculates the semantic buckets (I'm quite confident about the positive buckets, a little less about the negatives):

- 95 - 99% : Overwhelmingly Positive

- 80 - 94% : Very Positive

- 80 - 99% + few reviews: Positive

- 70 - 79% : Mostly Positive

- 40 - 69% : Mixed

- 20? - 39% : Mostly Negative

- 0 - 39% + few reviews: Negative

- 0 - 19% : Very Negative

- 0 - 19% + many reviews: Overwhelmingly Negative
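As a rough illustration, the table above could be turned into a function like the one below. Everything here is guesswork on top of guesswork: I don't know where Steam draws the line for "few" or "many" reviews, so the `few` and `many` thresholds are purely hypothetical placeholders, and I'm assuming a 100% score is treated like 99%.

```python
def guess_bucket(percent_positive, review_count, few=10, many=500):
    """Guess a game's semantic bucket from its score and review count.

    The cutoffs for `few` and `many` are hypothetical; the percentage
    ranges follow the table inferred from the search results.
    """
    if percent_positive >= 80:
        if review_count < few:
            return "Positive"  # high score, but too few samples
        if percent_positive >= 95:
            return "Overwhelmingly Positive"
        return "Very Positive"
    if percent_positive >= 70:
        return "Mostly Positive"
    if percent_positive >= 40:
        return "Mixed"
    if review_count < few:
        return "Negative"  # low score, but too few samples
    if percent_positive >= 20:
        return "Mostly Negative"
    if review_count >= many:
        return "Overwhelmingly Negative"
    return "Very Negative"


print(guess_bucket(100, 1))       # Positive
print(guess_bucket(75, 14_000))   # Mostly Positive
print(guess_bucket(99, 32_000))   # Overwhelmingly Positive
```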

Notice that "positive" and "negative" in particular are calculated differently from the other buckets -- it's anything high or low but with very few samples. This leads to really weird results, as "mostly positive" reviews don't even show up until page 141, underneath titles with only a single review:

How does a title with a single thumbs up deserve a higher rank than one with over 11,000 positive affirmations?

This further strengthens the case for Evan Miller's sorting method, but it brings up other concerns.

Give me a chance

1. Under Judgment

As I argued in my last article, there really needs to be some kind of "firehose" or "under judgment" filter, where ALL new titles are put on a special page where they are guaranteed some minimum amount of visibility. Until they have achieved a certain "critical mass" number of reviews, they are not assigned to any semantic bucket and no information about their review score is revealed. This prevents the absurd situation where a game's initial chart placement is determined by the first random dork to post a review.
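A minimal sketch of what I have in mind, in Python. The function name and the `critical_mass` value of 50 are placeholders I picked for illustration; the right threshold is something Valve would have to tune.

```python
def visible_rating(positive, total, critical_mass=50):
    """Hide a title's score until it has enough reviews.

    critical_mass is a hypothetical threshold, not a known Steam value.
    """
    if total < critical_mass:
        return "Under Judgment"  # no bucket and no score shown yet
    return f"{round(100 * positive / total)}% positive"


print(visible_rating(1, 1))      # Under Judgment
print(visible_rating(90, 100))   # 90% positive
```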

2. Subjectivity

If the whole point of NewSteam is to do targeted recommendations, what if I only care about certain kinds of user reviews? If there's a new Action/RPG that's out, and Action players hate it but RPG players love it, maybe I only care what the RPG players think. So even if it gets a "50% - mixed" score overall, in my book it could actually be a "98% - overwhelmingly positive?".

I'm not entirely sure how to account for things like this, and it's beyond the scope of this article, but it bears mentioning. Netflix and Kongregate have explored this issue at length, and Steam could learn a lot from them.

3. Better Charts Everywhere

Everywhere there's a chart on Steam, we need better sorting options. For instance, if you go to the Strategy Games tag page, right now you can only see "New Releases", "Top Sellers", and "Specials." There should be at least two more tabs -- "Top Rated" and "Recommended for you."

So those are my concerns about how Steam deals with its user ratings. Hopefully I've written something useful here that might be of assistance to the hard-working folks at Valve!

EDIT: I should probably mention in the interests of full disclosure that Defender's Quest would stand to benefit from this change.